Ijraset Journal For Research in Applied Science and Engineering Technology

A New Technology of Deep Learning for Brain Pathology using EEG

Authors: B Bhaskara Rao, M. Lakshmi Prasanna, K. RaghaLikitha, P. S. Vyshnavi, A. Subhasree

DOI Link: https://doi.org/10.22214/ijraset.2024.60352


Abstract

Deep learning and other machine learning technologies have paved the way for the emergence of autonomous life-supporting systems. In this work, we present an autonomous electroencephalogram (EEG) pathology detection approach based on deep learning. Brain signaling can be affected by a variety of illnesses, so the brain activity captured as EEG signals can be used to determine whether or not an individual has a pathology. In the proposed approach, the raw EEG data are transformed into a spatiotemporal representation, which is then fed to a convolutional neural network (CNN) as input. Although this field is actively researched, several issues remain open; this work addresses some of them and obtains encouraging results.

Introduction

I. INTRODUCTION

Advances in machine learning (ML) and artificial intelligence (AI) have made advanced systems with feasible, routine applications a reality. Cloud computing and the Internet of Things (IoT) have dramatically changed distributed computing and storage. Smart healthcare systems combine modern machine learning tools, cloud computing, next-generation communication protocols, and the IoT to provide patients with different kinds of healthcare. Such systems rely on real-time processing and high precision. Many research efforts use deep learning because of the high accuracy it achieves in applications such as face recognition, voice recognition, computer vision, image processing, and signal processing. A crucial requirement of deep learning models is an adequate quantity of training data. Because deep architectures require a large amount of training data, they may not be practical in real-time scenarios. Deep learning models commonly have tens of millions of parameters, which significantly increases their computational complexity. Even a relatively thin but deep architecture achieves high precision only at a significant computational cost.

Monitoring and measuring EEG signals has shown promise in the detection of neurological disorders and diseases such as epilepsy, Alzheimer's disease, and stroke. An electroencephalogram (EEG) is a non-invasive and inexpensive way to measure brain activity. However, recording, processing, and analysing EEG signals is time-consuming, and interpreting the results requires medical expertise. An automated system designed for real-time EEG processing should not be deployed in a clinical setting without first undergoing rigorous training. Deep learning methods are now widely used to interpret EEG information. Because illnesses that affect the brain are becoming more common, researchers are developing EEG diagnostic tools for smart medical applications. Recent studies cover topics ranging from stroke and depression therapy to Alzheimer's disease and haemorrhage prevention.

Emergency care and real-time patient monitoring are necessary for many medical conditions. For patients, the outcome can be disastrous and even fatal if services are delayed. Smart healthcare systems should therefore be sophisticated, dependable, and accurate enough to diagnose these conditions. Medical professionals must be able to access medical records and offer professional assistance as required, and smart health facilities and smart ambulances should be easily accessible in an emergency. A smart healthcare system that uses EEG signals to diagnose pathologies helps to address these difficulties.

Our system receives EEG data, evaluates it, and determines whether it is normal or not. Any condition affecting the brain might cause an abnormal EEG. For analysis, real-time EEG data collected by multimodal Internet of Things smart sensors is transferred to a cloud server. The recorded speech, movement, emotion, and EEG signals are pre-processed after collection to ascertain the patient's health status. The deep learning module then sorts the EEG data into normal and pathological categories. Once all of the data has been processed, the server notifies the medical experts on duty if emergency treatment is required. By reviewing the patient's electronic medical records, the medical staff can remain informed about the patient's status.

The proposed EEG pathology detection system is based on convolutional neural networks (CNNs). Both shallow and deep CNN models are studied. The deep CNN model is employed to facilitate transfer learning. Finally, a multi-layer perceptron (MLP) is used to fuse parallel deep CNN models. The contributions of this study are: (i) using a CNN model to detect EEG pathology; (ii) comparing the capacity of shallow and deep CNN models to identify EEG pathology; and (iii) fusing deep CNN models using an MLP to improve detection precision.

II. RELATED WORK

This section covers a few studies and perspectives on cognitive and smart healthcare, along with EEG-based pathology detection.

Yixue Hao and Min Chen observe that, with the rapid advancement of computer and medical technologies, interest in healthcare systems has risen in both industry and academia. Nevertheless, the majority of healthcare systems cannot offer specific users a personalized resource service and do not take patients' emergency situations into account. To solve this issue, they propose a smart healthcare system based on Edge-Cognitive-Computing (ECC). The cognitive computing capability of this system allows it to monitor and assess the physical well-being of its users. It also adjusts the distribution of computing resources across the entire edge computing network according to the health risk score of each user. Their findings show that the ECC-based healthcare system improves patient survival rates during sudden emergencies, provides a better user experience, and uses computing resources effectively.

Shamim Hossain and Ghulam Muhammad propose an automated emotion recognition system that operates on the edge cloud and uses privacy-preserving techniques. By secretly transmitting user voice and face image signals to multiple edge clouds, Internet of Things (IoT) devices can safeguard users' privacy. The edge clouds handle basic signal processing before the data is transmitted to the core cloud. The core cloud uses a pre-trained model based on convolutional neural networks (CNNs) to extract deep-learned features from the visual and audio inputs. Two deep sparse auto-encoders then combine the signal features and augment them with additional non-linearity. Finally, a support vector machine assigns the fused features to an emotion class. Several experiments were carried out with the proposed method on two publicly available datasets, the RML database and the eNTERFACE'05 database. On the two databases, the system's best recognition accuracies were 87.6% and 82.3%, respectively, which the authors report as outperforming other state-of-the-art systems.

Po Yang and Zhikun Deng note that the swift progression of Internet of Things (IoT) technology presents prospects for lifelogging data monitoring through a range of IoT assets, such as wearable sensors and mobile applications. Unfortunately, lifelogging personal data contains a great deal of uncertainty and is rarely used for healthcare studies because of the heterogeneity of connected devices and the variety of life behaviors in an IoT environment. For the purpose of longitudinal health evaluation, lifelogging personal data must be effectively validated. Their paper investigates how health data collected by IoT physical activity tracking devices could be used more reliably. The goal of the rule-based adaptive lifelogging physical activity validation model (LPAV-IoT) is to eliminate uncertainty and provide reliable estimates in healthcare IoT contexts. To investigate the important factors affecting the reliability of activity tracking in lifelogging, LPAV-IoT presents a method with four levels and three modules. The authors develop and test several validation criteria using reliability indicators and uncertainty threshold factors. LPAV-IoT is then evaluated in a case study on an IoT-enabled personalized healthcare platform, MHA, which integrates three state-of-the-art wearables with mobile applications. In this setting, the results demonstrate that the LPAV-IoT rules can reliably filter out 75% of the irregular uncertainty and adaptively indicate the reliability of activity data from lifelogging devices.

Musaed Alhussein and Ghulam Muhammad note that, with the advancement of machine learning technologies, particularly deep learning, automated systems that assist human life are flourishing. They propose an automatic electroencephalogram (EEG) pathology detection system based on deep learning. Various types of pathologies can affect brain signals, so the brain signals captured in the form of EEG signals can indicate whether or not a person suffers from a pathology. In their system, the raw EEG signals are processed into a spatio-temporal representation, which is the input to a convolutional neural network (CNN). Two different CNN models, a shallow model and a deep model, are investigated using transfer learning. A fusion strategy based on a multilayer perceptron is also investigated. The experimental results on the Temple University Hospital EEG Abnormal Corpus v2.0.0 show that the system with the deep CNN model and fusion achieves 87.96% accuracy, which is better than some reported accuracy rates on the same corpus.

III. BACKGROUND KNOWLEDGE

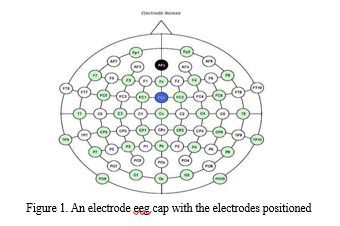

In a basic electroencephalogram (EEG) recording, electrodes are placed on the scalp to detect the electrical activity that neurons in the brain generate, which allows for a better understanding of the brain's function. Each electrode is connected by its own wire so that it can measure the voltage changes, or electric potential variations, produced by the flow of ionic currents in neurons. EEG signals span a range of frequencies and are conventionally classified into five primary bands. Because these bands are associated with different brain processes and functions, their amplitudes and relative intensities can aid in the diagnosis of neurological and brain disorders. As illustrated in Figure 1, electrodes are affixed to particular areas of the head in accordance with the international 10-20 system in order to record EEG signals, which keeps laboratory procedures consistent worldwide. The EEG signal from the electrodes is passed through amplifiers and filters before it is displayed. The frequency range of EEG signals used to measure brain activity is typically between 0.1 Hz and 100 Hz, as illustrated in Figure 1.

EEG processing also faces challenges in artifact removal, signal processing and analysis, individual differences, interpretation, and validation. Despite these problems, electroencephalography (EEG) is a diagnostic and monitoring tool for a variety of neurological and psychiatric illnesses, including epilepsy and sleep disorders. EEG is also used to study the connectivity and operation of the brain, including language, memory, and attention. As a result, EEG is currently a vital tool in neurological and therapeutic research.

A. Existing Model

The existing methodology outlined in the study introduces a robust approach for brain tumor detection in magnetic resonance images (MRIs) using a fine-tuned EfficientNet model. The process begins with preprocessing steps, including image normalization, resizing, blurring, and high-pass filtering, to enhance image quality and extract relevant features. Data augmentation techniques are then applied to increase the dataset's size and diversity, crucial for training deep learning models effectively.

The core of the methodology lies in implementing the EfficientNet-B0 model, a convolutional neural network (CNN) architecture optimized for both efficacy and computational efficiency. This model is fine-tuned on the brain tumor MR image dataset, leveraging transfer learning from pre-trained weights on the ImageNet dataset.

Through transfer learning, the model inherits knowledge about basic image features, which significantly accelerates training and improves performance. The fine-tuning process involves adjusting the model's last layers to adapt to the specific task of brain tumor detection. Hyperparameters such as optimizer choice (Adam), learning rate, batch size, and loss function (binary cross-entropy) are carefully selected to optimize training efficiency and accuracy.

The performance of the proposed model is evaluated using various metrics, including accuracy, precision, recall, F1-score, sensitivity, specificity, and the area under the receiver operating characteristic curve (AUC). Experimental results demonstrate the effectiveness of the proposed model in accurately classifying brain MR images, with high AUC indicating its ability to distinguish between tumor and non-tumor cases.

Additionally, confusion matrices provide insights into the model's classification performance, while ROC curves visualize its overall discriminatory power. Overall, the proposed methodology offers a comprehensive framework for automated brain tumor detection in MRI scans, leveraging the power of deep learning and transfer learning techniques for enhanced diagnostic accuracy.

IV. METHODOLOGY

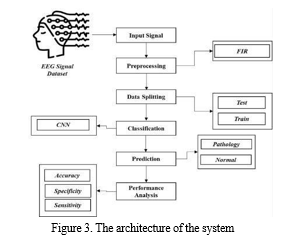

The EEG signal dataset used in this work was gathered from a dataset repository. The first stage is preprocessing, in which an FIR (Finite Impulse Response) filter is used to remove undesired noise from the input signal. The next step is to separate the dataset into training and test sets: the training data is used to fit the model, and the test data is used for prediction and assessment. After that, a deep learning algorithm, a CNN (Convolutional Neural Network), is applied. The EEG signal input is then categorized as either pathological or non-pathological. Finally, performance measures such as accuracy, precision, recall, and F1 score are estimated.
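The performance measures named above can be computed directly from the counts of correct and incorrect predictions. The following is a minimal sketch rather than the authors' evaluation code; the function name `classification_metrics` and the toy labels are illustrative assumptions, with 1 standing for "pathological" and 0 for "non-pathological".

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted/present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy example (hypothetical labels, not results from the paper).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Recall is the same quantity as sensitivity; specificity would be computed analogously as tn / (tn + fp).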

A. Input Signal

EEG, which records brain waves from the scalp, is an efficient method for detecting various brain states. The signals are primarily grouped into five bands: delta, theta, alpha, beta, and gamma. Their frequencies range from 0.1 Hz to over 100 Hz. Data selection is the step of choosing the input signal that will be classified as pathological or not. The dataset, which is stored in files with the extension ".dat", is read in Python using the pandas package.
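To make the notion of frequency bands concrete, the sketch below estimates how much power a signal carries in each band using the FFT. The band edges are conventional textbook values and are an assumption here, since the paper does not list them; the 256 Hz sampling rate, the synthetic 10 Hz test signal, and the name `band_powers` are likewise illustrative.

```python
import numpy as np

# Conventional band edges in Hz (assumed; not specified in the paper).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 100.0),
}


def band_powers(signal, fs):
    """Sum the FFT power of a 1-D signal inside each EEG band."""
    signal = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    # Remove the mean so the DC offset does not leak into the delta band.
    power = np.abs(np.fft.rfft(signal - signal.mean())) ** 2
    return {
        name: float(power[(freqs >= lo) & (freqs < hi)].sum())
        for name, (lo, hi) in BANDS.items()
    }


fs = 256                             # assumed sampling rate in Hz
t = np.arange(0, 4, 1.0 / fs)        # 4 seconds of samples
test_wave = np.sin(2 * np.pi * 10 * t)   # synthetic 10 Hz oscillation
powers = band_powers(test_wave, fs)
dominant = max(powers, key=powers.get)
```

For this synthetic input, nearly all of the power falls in the alpha band, which is what a 10 Hz oscillation should produce.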

B. Preprocessing And Filtering

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response has finite duration, because it settles to zero in a finite amount of time. Each output sample depends only on a finite number of input samples. The impulse response is "finite" because this type of filter has no feedback loop: if an impulse is applied to the input, the output becomes zero once the "1"-valued sample has passed through all of the filter coefficients in the delay line. The impulse response of an Nth-order discrete-time FIR filter, that is, its response to a Kronecker delta input, lasts for N + 1 samples and is zero afterwards. FIR filters can be discrete-time or continuous-time, and digital or analog.

Raw EEG signals must be preprocessed and appropriately filtered to remove artifacts and noise before they are used for feature extraction and classification, which improves the extraction of important information. EEG data are biological indicators of neural activity. However, because of their low amplitude and great temporal variability, they are susceptible to outside interference during data collection. This interference can be caused by blinking, eye movement, cardiac activity (ECG), and muscle activity (EMG), and is often referred to as artifacts.
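The finite-duration property described above can be checked numerically. The sketch below designs a small low-pass FIR filter with the windowed-sinc method and feeds it a unit impulse. The design method, the order of 8, the 40 Hz cutoff, and the 256 Hz sampling rate are all assumptions chosen for illustration; the paper does not state which design or parameters were used.

```python
import numpy as np


def lowpass_fir(num_taps, cutoff_hz, fs):
    """Windowed-sinc low-pass FIR design using a Hamming window."""
    # Sample positions centred on zero so the filter is symmetric.
    n = np.arange(num_taps) - (num_taps - 1) / 2.0
    fc = cutoff_hz / fs                      # cutoff in cycles per sample
    h = 2 * fc * np.sinc(2 * fc * n)         # ideal low-pass, truncated
    h *= np.hamming(num_taps)                # taper to reduce ripple
    return h / h.sum()                       # normalise to unity gain at DC


order = 8                                    # N (assumed for illustration)
taps = lowpass_fir(order + 1, cutoff_hz=40.0, fs=256.0)

# Feed a Kronecker delta through the filter.
impulse = np.zeros(32)
impulse[0] = 1.0
response = np.convolve(impulse, taps)[: impulse.size]

# The first N + 1 output samples reproduce the coefficients;
# everything after that is zero because there is no feedback.
nonzero_part = response[: order + 1]
tail = response[order + 1:]
```

Here `nonzero_part` equals the coefficient vector and `tail` contains only zeros, matching the N + 1 sample duration stated in the text.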

C. Data Splitting

Data is central to learning in machine learning. In addition to training data, test data are essential for evaluating an algorithm's performance. In this work, 70% of the dataset was set aside for training and the remaining 30% was used for testing. Dividing the available data into two portions in this way is known as data splitting, and it is usually done for validation purposes: one portion is used to build a prediction model, and the model's efficacy is then evaluated on the rest.
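A 70/30 split of this kind can be sketched in a few lines. The helper name `train_test_split_indices`, the fixed random seed, and the placeholder arrays are assumptions for illustration; the paper does not say how its split was drawn (for example, whether it was shuffled or stratified).

```python
import numpy as np


def train_test_split_indices(n_samples, test_fraction=0.3, seed=0):
    """Shuffle sample indices and split them into train and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return order[n_test:], order[:n_test]    # (train indices, test indices)


# Placeholder data: 100 samples with 2 features each and binary labels.
X = np.arange(200).reshape(100, 2)
y = np.arange(100) % 2

train_idx, test_idx = train_test_split_indices(len(X), test_fraction=0.3)
X_train, X_test = X[train_idx], X[test_idx]
y_train, y_test = y[train_idx], y[test_idx]
```

Splitting by index keeps features and labels aligned. With EEG data it is often preferable to split by subject so that recordings from one person do not appear in both sets, although the paper does not say whether that was done.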

D. Feature Extraction And Classification

EEG data commonly consist of long recordings across several channels, yielding massive volumes of information. The objective of feature extraction is to simplify the dataset by identifying its key attributes, which reduces complexity and the risk of overfitting. To study brain activity, EEG recordings are often obtained from both healthy and sick individuals, which results in a significant amount of data to analyse.

EEG features are values that describe properties of the signal, which is typically sampled at rates between 100 and 1000 Hz. The various features are then combined to form a feature vector. Multiple methods may need to be applied to extract features from an EEG signal or dataset. Following feature extraction, a classifier can determine whether or not the individual has a brain pathology.
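As an illustration of combining features into a feature vector, the sketch below computes four simple time-domain descriptors per channel and concatenates them. The particular features (mean, standard deviation, line length, zero-crossing count), the function names, and the random placeholder data are assumptions; the paper does not list which features it extracts.

```python
import numpy as np


def channel_features(x):
    """Four simple time-domain descriptors of one EEG channel."""
    x = np.asarray(x, dtype=float)
    line_length = np.abs(np.diff(x)).sum()          # total sample-to-sample change
    zero_crossings = np.sum(x[:-1] * x[1:] < 0)     # number of sign changes
    return np.array([x.mean(), x.std(), line_length, zero_crossings],
                    dtype=float)


def feature_vector(eeg):
    """Concatenate per-channel features of an (n_channels, n_samples) array."""
    return np.concatenate([channel_features(channel) for channel in eeg])


# Placeholder recording: 4 channels, 512 samples of random noise.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((4, 512))
features = feature_vector(eeg)      # length = 4 channels * 4 features
```

The resulting one-dimensional vector is the kind of input a conventional classifier expects. A CNN, by contrast, can also learn its features directly from the channel-by-time array.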

E. Dataset

Each participant's heart rate and electroencephalogram were recorded, and all participants rated the films they watched. In this dataset, the labels and the data for each channel are stored in separate files. For every trial and every individual, the data from each channel is kept in a column, with rows ordered by time.

Kaggle is the source of the data: https://www.kaggle.com/datasets/samnikolas/eeg-dataset?resource=download

F. Advantages

Compared with current methods, the proposed approach achieves a high level of accuracy, and it is applicable to a large number of datasets. First, the input signal is cleaned to remove unwanted noise. Among deep learning algorithms, convolutional neural networks (CNNs) are some of the best suited to image recognition and processing tasks. A CNN contains several types of layers, including convolutional, pooling, and fully connected layers.

The convolutional layers are the crucial component of a CNN. They use filters to extract features such as edges, textures, and shapes from the input images. The output of the convolutional layers is then passed through pooling layers, which down-sample the feature maps to reduce their spatial dimensions while preserving the most important information. The output of the pooling layers is subsequently passed to one or more fully connected layers for image classification or prediction. CNNs are trained on a large collection of labelled images to recognize the patterns and traits associated with specific objects or categories. After training, a CNN can recognize objects in images, assign them to categories, or extract useful features for further processing.
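The convolution and pooling steps described above can be illustrated with a forward pass in plain NumPy. This is a didactic sketch and not the network used in the paper: the 6x6 toy image, the hand-picked edge kernel, and the function names are assumptions, and a real CNN learns its kernels during training.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide a kernel over an image without padding.

    As in most deep learning libraries, this is technically
    cross-correlation: the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; trailing rows/columns are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


# Toy image: dark left half, bright right half (one vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

kernel = np.array([[-1.0, 1.0]])                 # responds to left-to-right increases
feature_map = np.maximum(conv2d_valid(image, kernel), 0.0)   # ReLU activation
pooled = max_pool2d(feature_map, size=2)
```

The feature map is non-zero only in the column where the edge sits, and pooling halves the resolution while keeping that response.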

Convolutional neural networks (CNNs) have shown state-of-the-art performance on several image recognition tasks, including object detection, image segmentation, and object classification. They are used in security systems, autonomous vehicles, and medical imaging, among other places, and find widespread application in fields connected to computer vision and image processing. CNNs are deep learning neural networks built specifically to deal with structured arrays of data, such as images. They are very good at detecting design elements in the input image, including lines, gradients, circles, and even eyes and faces. This characteristic makes convolutional neural networks highly dependable for computer vision.

A CNN can operate directly on raw images without requiring preprocessing. A convolutional neural network is a feedforward neural network, and many such networks have around 20 layers or fewer. The convolutional layer is the key component of every convolutional neural network. CNNs are built from many convolutional layers, which can detect progressively more intricate structures as they are stacked on top of one another. Handwritten digits can be recognized with 3 or 4 convolutional layers, while distinguishing human faces may take around 25. The objective of this area of study is to train computers to approximate human visual perception and cognition in a variety of contexts, such as image and video recognition, image analysis and classification, media recreation, recommendation systems, and natural language processing.

V. RESULTS AND DISCUSSION

We found encouraging results in our investigation into the identification of brain pathology using electroencephalogram (EEG) data, suggesting the possibility of precise abnormality detection. More specifically, our technique allowed for efficient classification by identifying unique patterns in EEG signals that corresponded to various brain diseases. These results imply that non-invasive brain illness diagnosis and monitoring may benefit from EEG-based techniques.

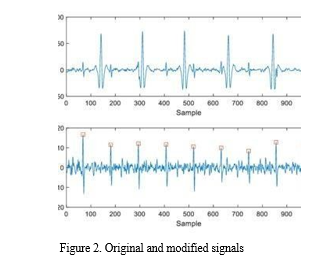

The result shows the filter coefficients and the frequency response as a graph. The filter coefficients are the values that define the form and properties of the frequency response of a Finite Impulse Response (FIR) filter. They represent the weighting applied to each input sample during the filter's convolution process. The values of these coefficients are usually determined by the desired frequency response of the filter, such as low-pass, high-pass, band-pass, or band-stop. They are frequently calculated using design strategies such as frequency sampling, windowing, and optimization approaches like the Parks-McClellan algorithm. Each tap corresponds to one coefficient, which is applied to the matching delayed input sample during convolution. The frequency response and characteristics of a 74-tap FIR filter are therefore determined by 74 coefficients. The number of taps affects the frequency resolution, the computational complexity, and the filter's ability to provide the desired filtering characteristics.
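The relationship between the 74 coefficients and the frequency response can be reproduced numerically by taking the FFT of the coefficient vector. The sketch below is an assumption-laden illustration: only the tap count of 74 comes from the text, while the low-pass type, the windowed-sinc design with a Hamming window, the 40 Hz cutoff, and the 256 Hz sampling rate are hypothetical choices.

```python
import numpy as np

NUM_TAPS = 74          # from the text
FS = 256.0             # sampling rate in Hz (assumed)
CUTOFF_HZ = 40.0       # low-pass cutoff in Hz (assumed)

# Windowed-sinc low-pass design (one of the "windowing" strategies).
n = np.arange(NUM_TAPS) - (NUM_TAPS - 1) / 2.0
fc = CUTOFF_HZ / FS
taps = 2 * fc * np.sinc(2 * fc * n) * np.hamming(NUM_TAPS)
taps /= taps.sum()     # unity gain at 0 Hz

# Frequency response: zero-padded FFT of the coefficients.
N_FFT = 1024
freqs = np.fft.rfftfreq(N_FFT, d=1.0 / FS)
magnitude = np.abs(np.fft.rfft(taps, n=N_FFT))
magnitude_db = 20 * np.log10(np.maximum(magnitude, 1e-12))

# Gain at one frequency inside and one outside the passband.
passband_gain_db = magnitude_db[np.argmin(np.abs(freqs - 10.0))]
stopband_gain_db = magnitude_db[np.argmin(np.abs(freqs - 80.0))]
```

Plotting `taps` against the tap index and `magnitude_db` against `freqs` would give the two kinds of curves the paragraph describes. Using more taps narrows the transition between passband and stopband at the cost of more multiplications per output sample.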

VI. FUTURE ENHANCEMENT

In the future, we aim to combine two distinct machine learning approaches. To reach even higher performance, the suggested clustering and classification methods may be extended or modified. In addition to the combination of data mining techniques already used, further combinations and alternative clustering methods could improve the detection accuracy. This study presents a thorough examination of the current state of EEG processing for medical diagnosis. Scholars researching EEG processing for medical diagnosis may use it as a resource, as it offers an in-depth analysis of several important research projects. It also provides direction for future research on EEG analysis in the medical domain.

Conclusion

The EEG signal dataset used as input was obtained from a dataset repository. We implemented a deep learning classifier based on a CNN, and the outcome is reported in terms of performance indicators including accuracy, specificity, and sensitivity. This article examines the use of several important technologies within the smart healthcare framework, and a number of crucial edge and core functionalities are worked out. An EEG-based pathology detection system is built and analyzed as a case study. This paper analyzes EEG features, describes how they are extracted, and weighs the benefits and drawbacks of each method. The most recent advancements in feature engineering and classification techniques are also examined. Many scholarly articles have provided the underlying material for these methods and the associated conclusions. Using an EEG signal dataset, we have constructed a CNN for detecting brain pathology.

References

[1] M. Chen et al., “Edge Cognitive Computing Based Smart Healthcare System,” Future Generation Computer Systems, vol. 86, 2018, pp. 403–11.

[2] G. Muhammad et al., “Edge Computing with Cloud for Voice Disorders Assessment and Treatment,” IEEE Commun. Mag., vol. 56, no. 4, Apr. 2018, pp. 60–65.

[3] P. Yang et al., “Lifelogging Data Validation Model for Internet of Things Enabled Personalized Healthcare,” IEEE Trans. Systems, Man, and Cybernetics: Systems, vol. 48, no. 1, Jan. 2018, pp. 50–64.

[4] M. Alhussein, G. Muhammad, and M. S. Hossain, “EEG Pathology Detection based on Deep Learning,” IEEE Access, vol. 7, no. 1, Dec. 2019, pp. 27,781–788.

[5] Y. Hao et al., “Smart-Edge-CoCaCo: AI-Enabled Smart Edge with Joint Computation, Caching, and Communication in Heterogeneous IoT,” IEEE Network, vol. 33, no. 2, Mar./Apr. 2019, pp. 58–64.

[6] W. Shi et al., “Edge Computing: Vision and Challenges,” IEEE Internet of Things J., vol. 3, no. 5, 2016, pp. 637–64.

[7] M. S. Hossain and G. Muhammad, “An Audio-Visual Emotion Recognition System Using Deep Learning Fusion for Cognitive Wireless Framework,” IEEE Wireless Commun., vol. 26, no. 3, June 2019, pp. 62–68.

[8] M. A. Salahuddin, A. Al-Fuqaha, and M. Guizani, “Software-Defined Networking for RSU Clouds in Support of the Internet of Vehicles,” IEEE Internet of Things J., vol. 2, no. 2, 2015, pp. 133–44.

[9] J. Wang et al., “A Software Defined Network Routing in Wireless Multihop Network,” J. Network and Computer Applications, vol. 85, May 2017, pp. 76–83.

[10] M. S. Hossain and G. Muhammad, “Emotion-Aware Connected Healthcare Big Data Towards 5G,” IEEE Internet of Things J., vol. 5, no. 4, Aug. 2018, pp. 2399–2406.

[11] M. S. Hossain et al., “Applying Deep Learning to Epilepsy Seizure Detection and Brain Mapping,” ACM Trans. Multimedia Comp. Commun. Appl., vol. 15, Jan. 2019, p. 10.

[12] C. Szegedy et al., “Going Deeper with Convolutions,” 2015 IEEE Conf. Computer Vision and Pattern Recognition, Boston, MA, 2015, pp. 1–9.

[13] A. A. Amory et al., “Deep Convolutional Tree Networks,” Future Generation Computer Systems, vol. 101, Dec. 2019, pp. 152–68.

[14] J. Picone and I. Obeid, “Temple University Hospital EEG Data Corpus,” Frontiers Neurosci., vol. 10, May 2016, p. 196.

[15] S. U. Amin et al., “Cognitive Smart Healthcare for Pathology Detection and Monitoring,” IEEE Access, vol. 7, 2019, pp. 10,745–53.

[16] D. L. Schomer and F. L. Da Silva, Niedermeyer’s Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. Philadelphia, PA, USA: Lippincott Williams & Wilkins, 2012.

[17] D. A. Engemann, F. Raimondo, J.-R. King, B. Rohaut, G. Louppe, F. Faugeras, J. Annen, H. Cassol, O. Gosseries, D. Fernandez-Slezak, S. Laureys, L. Naccache, S. Dehaene, and J. D. Sitt, “Robust EEG-based cross-site and cross-protocol classification of states of consciousness,” Brain, vol. 141, no. 11, pp. 3179–3192, Nov. 2018.

[18] N. S. Bastos, B. P. Marques, D. F. Adamatti, and C. Z. Billa, “Analyzing EEG signals using decision trees: A study of modulation of amplitude,” Comput. Intell. Neurosci., vol. 2020, pp. 1–11, Jul. 2020.

[19] J. T. Giacino, J. J. Fins, S. Laureys, and N. D. Schiff, “Disorders of consciousness after acquired brain injury: The state of the science,” Nature Rev. Neurol., vol. 10, no. 2, pp. 99–114, Feb. 2014.

[20] S. Hagihira, “Changes in the electroencephalogram during anaesthesia and their physiological basis,” Brit. J. Anaesthesia, vol. 115, pp. 27–31, Jul. 2015.

Copyright

Copyright © 2024 B Bhaskara Rao, M. Lakshmi Prasanna , K. RaghaLikitha, P. S. Vyshnavi , A. Subhasree. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60352

Publish Date : 2024-04-15

ISSN : 2321-9653

Publisher Name : IJRASET
